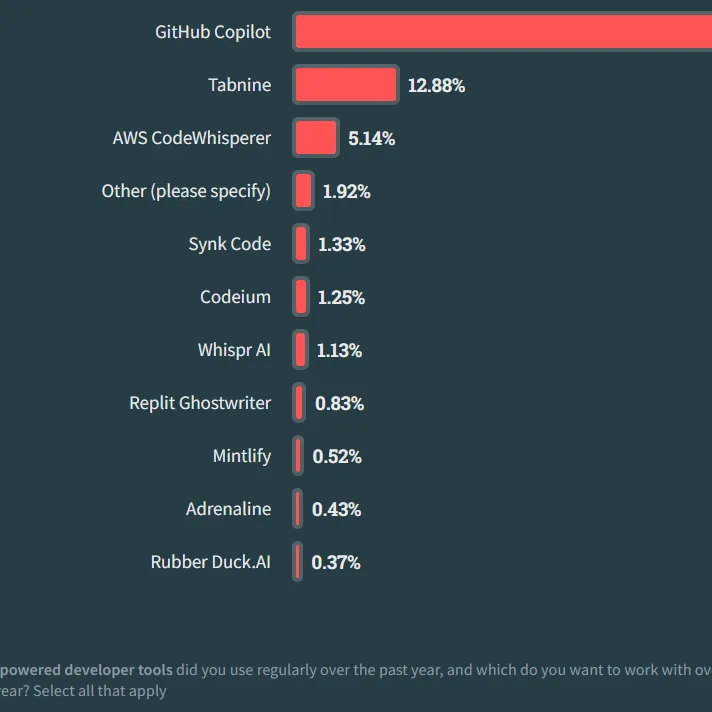

Recent evaluations of GitHub Copilot’s AI capabilities have produced mixed results, prompting discussion in the developer community about how well it actually generates code. In a test published on ZDNet, a developer found that while Copilot can help generate code snippets, it often fails to deliver quality output. The developer concluded that ‘it just might be terrible at writing code,’ pointing in particular to its limitations on complex tasks.

A mega thread on iProgrammer collected a range of user experiences with Copilot. Users credited features such as its code suggestions, with caveats about the quality of the code it generates. Some reported that Copilot sometimes produces code that is ‘incomplete’ or ‘incorrect,’ which raises questions about its reliability for critical projects. The thread also pointed to an ongoing challenge for AI programming tools in general: understanding nuanced requests and requirements.

Separately, Visual Studio Magazine reported improvements to Copilot in Visual Studio, including enhanced feature search shortcuts and slash commands. Despite these advances, integrating AI into coding still presents hurdles that developers need to weigh carefully.

The community remains divided: some developers are embracing the technology, while others prefer traditional coding methods. AI tools such as GitHub Copilot may redefine programming practices, but skepticism remains widespread as developers try to balance innovation against accuracy in software development.

GitHub Copilot’s Performance Under Scrutiny: A Comprehensive Analysis